CrewAI Explained: Coordinating Teams of AI Agents

How multi-agent systems collaborate, communicate, and execute complex tasks through CrewAI’s orchestration framework.

For years, we’ve trained large language models to perform individual tasks: generating text, summarizing data, or producing code on demand. But real-world systems don’t operate in isolation. They require coordination, context awareness, and dynamic decision-making.

That’s where CrewAI comes in.

CrewAI is an emerging framework designed to coordinate multiple autonomous agents that collaborate toward shared goals. Instead of relying on a single LLM prompt chain, CrewAI structures computation into modular, role-based components that communicate through a controlled protocol, much like distributed microservices in traditional software systems.

What Is CrewAI?

At its core, CrewAI is a multi-agent orchestration layer. It enables agents, powered by LLMs or fine-tuned models, to act as independent cognitive units that can:

Interpret high-level objectives

Decompose problems into subtasks

Exchange context and results

Evaluate and refine outputs iteratively

Each agent in CrewAI has a defined role specification, capability scope, and execution policy. Agents exchange information through a shared memory bus, an abstraction that provides persistent context and inter-agent communication without exceeding the underlying model’s token limits.

This architecture moves beyond traditional automation into the territory of autonomous, self-coordinating intelligence.

System Overview: How CrewAI Works

CrewAI’s runtime can be visualized in four key layers:

1. Task Definition Layer

This layer parses the user’s high-level instruction (for example, “Generate a full competitive market analysis for AI agent startups”) and transforms it into structured subtasks with dependencies.

CrewAI uses an internal Task Graph, where each node represents a sub-objective and each edge represents an inter-agent dependency or data flow.
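The task-graph idea can be sketched with Python's standard library. This is an illustration of dependency ordering in general, not CrewAI's internal data structure: the subtask names and the `execution_order` helper are assumptions made up for the market-analysis example above.

```python
from graphlib import TopologicalSorter

# Hypothetical subtasks for the market-analysis example. Each key maps to
# the set of subtasks that must finish before it can start.
task_graph = {
    "collect_sources": set(),
    "profile_competitors": {"collect_sources"},
    "size_market": {"collect_sources"},
    "write_report": {"profile_competitors", "size_market"},
}


def execution_order(graph):
    """Return one valid order in which the subtasks can run.

    Raises graphlib.CycleError if the dependencies form a cycle.
    """
    return list(TopologicalSorter(graph).static_order())


order = execution_order(task_graph)
print(order)
```

The two middle subtasks have no dependency on each other, so a scheduler is free to run them in either order or at the same time.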

2. Agent Initialization Layer

Agents are instantiated with configurations that define their:

Prompt templates or model instructions

Tool access policies (APIs, search, databases)

Memory retrieval methods (vector stores or RAG backends)

Inter-agent communication schema (message queues, event triggers)

Each agent essentially becomes a stateful cognitive process, maintaining short-term and long-term memory contexts.
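As a rough sketch, the configuration items listed above could be grouped into a small data structure. The `AgentConfig` and `AgentState` classes and all of their fields are hypothetical names chosen for illustration; they are not CrewAI's actual classes.

```python
from dataclasses import dataclass, field


@dataclass
class AgentConfig:
    """Static settings fixed when the agent is created (all names hypothetical)."""
    role: str
    prompt_template: str
    allowed_tools: frozenset = frozenset()   # tool access policy
    memory_backend: str = "vector_store"     # memory retrieval method
    subscribed_topics: tuple = ()            # communication schema


@dataclass
class AgentState:
    """Mutable state the agent carries between steps."""
    config: AgentConfig
    short_term: list = field(default_factory=list)
    long_term: dict = field(default_factory=dict)

    def can_use(self, tool):
        """Check a tool name against the agent's access policy."""
        return tool in self.config.allowed_tools

    def remember(self, note, limit=3):
        """Append a note and keep only the most recent `limit` notes."""
        self.short_term.append(note)
        overflow = len(self.short_term) - limit
        if overflow > 0:
            del self.short_term[:overflow]


researcher = AgentState(
    AgentConfig(
        role="researcher",
        prompt_template="You are a market researcher. Task: {task}",
        allowed_tools=frozenset({"web_search"}),
    )
)
for note in ["n1", "n2", "n3", "n4"]:
    researcher.remember(note)
```

Separating the fixed configuration from the mutable state keeps the access policy easy to audit while the memory changes from step to step.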

3. Communication & Coordination Layer

CrewAI uses a Pub/Sub messaging architecture to handle agent-to-agent communication. Messages may include structured data (JSON), semantic embeddings, or control signals (for example, task handoff or error propagation).

This ensures scalable and asynchronous interaction between agents, similar to distributed systems that rely on message brokers like RabbitMQ or Kafka.
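The publish/subscribe pattern itself is easy to illustrate in a few lines. The sketch below is a minimal in-process bus, assuming a hypothetical `MessageBus` class and a made-up `task.handoff` topic; it delivers messages synchronously, whereas a production broker would queue them and deliver asynchronously.

```python
from collections import defaultdict


class MessageBus:
    """Tiny in-process publish/subscribe bus (illustrative only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        """Register a callable that receives every message on `topic`."""
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        """Deliver `message` to each subscriber; return the delivery count."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = MessageBus()
writer_inbox = []
bus.subscribe("task.handoff", writer_inbox.append)

delivered = bus.publish(
    "task.handoff",
    {"from": "researcher", "to": "writer", "payload": {"sources": 12}},
)
```

The publisher never references the writer directly; it only names a topic, which is what lets agents be added or removed without changing the sender.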

4. Supervision & Feedback Layer

A Supervisor Agent or a meta-controller monitors task progress, validates responses, and applies reinforcement signals when necessary.

It can implement critic loops, where outputs are evaluated using scoring functions or rule-based heuristics before being accepted into the final output stream.
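A critic loop of this kind can be sketched as a generate, score, retry cycle. The `critic_loop` function, its threshold, and the toy length-based scoring rule are all assumptions for illustration; a real supervisor would call a model to generate and would use a more meaningful evaluator.

```python
def critic_loop(generate, score, threshold=0.8, max_rounds=3):
    """Regenerate until a candidate scores at or above `threshold`.

    `generate(feedback)` returns a candidate output; `score(candidate)`
    returns a float. Returns (output, score, rounds_used). If no candidate
    passes, the best-scoring one seen is returned.
    """
    best_output, best_score = None, float("-inf")
    feedback = None
    for round_number in range(1, max_rounds + 1):
        candidate = generate(feedback)
        candidate_score = score(candidate)
        if candidate_score > best_score:
            best_output, best_score = candidate, candidate_score
        if candidate_score >= threshold:
            return candidate, candidate_score, round_number
        feedback = f"score {candidate_score:.2f} is below {threshold}"
    return best_output, best_score, max_rounds


# Toy stand-ins: the "agent" returns successively longer drafts and the
# "critic" is a rule-based heuristic that rewards length.
drafts = iter([
    "short",
    "a longer draft",
    "a much longer and more complete draft",
])


def toy_generate(feedback):
    return next(drafts)


def toy_score(text):
    return min(len(text) / 30, 1.0)


result = critic_loop(toy_generate, toy_score)
```

Capping the number of rounds matters in practice, since each retry costs another model call.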

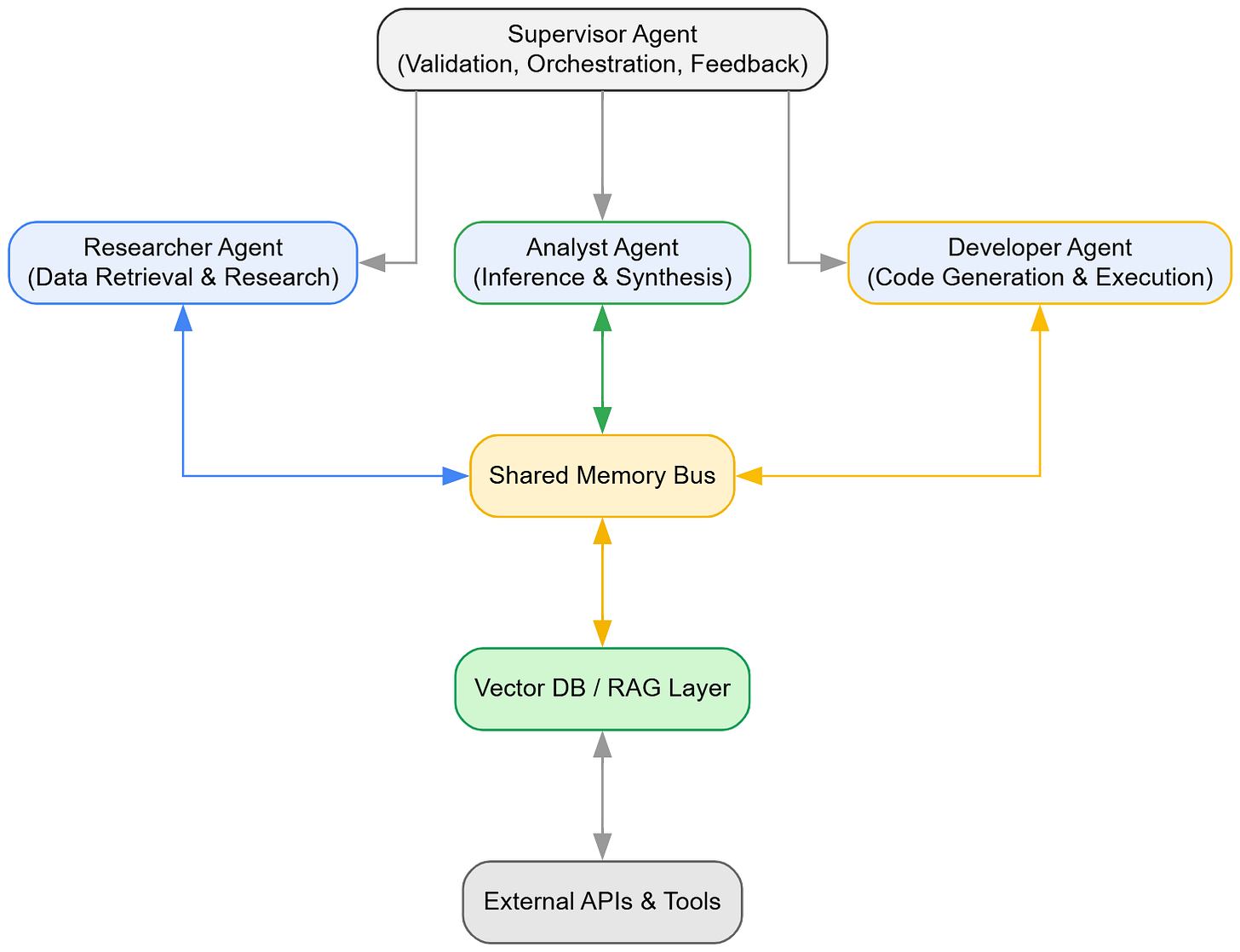

CrewAI Architecture (Conceptual Overview)

Visualizing how specialized AI agents interact through shared memory and orchestration layers.

Why CrewAI Matters

CrewAI operationalizes the idea of distributed cognition: dividing complex reasoning across multiple autonomous yet cooperative components.

Instead of stretching a single model across every part of a task, you get:

Role-specialized intelligence: Agents trained or instructed for narrow, high-performance roles.

Parallelized execution: Agents work asynchronously on different subtasks, reducing total task latency.

Redundancy & validation: Multiple agents can evaluate or cross-verify results, reducing hallucination risk.

Context continuity: Shared memory enables long-horizon reasoning across multiple steps.
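The latency benefit of parallelized execution can be shown with a small `asyncio` sketch. The `run_agent` coroutine and the agent names are hypothetical, and the sleep simply stands in for a model or tool call.

```python
import asyncio


async def run_agent(name, seconds):
    """Simulate one agent working on an independent subtask."""
    await asyncio.sleep(seconds)  # stands in for an LLM or tool call
    return f"{name}: done"


async def run_crew():
    # gather() runs the coroutines concurrently and returns results in the
    # order the coroutines were passed in, not the order they finished.
    return await asyncio.gather(
        run_agent("researcher", 0.02),
        run_agent("analyst", 0.01),
        run_agent("writer", 0.03),
    )


results = asyncio.run(run_crew())
print(results)
```

Because the three waits overlap, the total time is roughly that of the slowest agent rather than the sum of all three. This only helps when the subtasks are genuinely independent.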

In production scenarios, CrewAI unlocks:

Enterprise automation: Agents for research, summarization, analysis, and reporting.

AI DevOps pipelines: Autonomous QA, deployment checks, and observability monitoring.

Collaborative intelligence systems: Agents that negotiate, plan, and strategize across departments.

Technical Deep Dive

Dynamic Role Allocation: CrewAI supports spawning or retiring agents at runtime, enabling adaptive resource allocation.

Persistent Vector Memory: Uses embedding-based retrieval to allow contextual continuity across tasks.

Tool Augmentation: Agents can invoke APIs, use SQL databases, or access document stores through controlled connectors.

Critic–Evaluator Loops: Supervisor agents can apply reinforcement logic using grading functions or reward metrics.

State Serialization: Each agent’s internal state can be checkpointed, versioned, or replayed, enabling fault tolerance and reproducibility.

These mechanisms make CrewAI not just a coordination layer, but a runtime framework for agentic intelligence at scale.
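Of the mechanisms above, state serialization is the simplest to illustrate. The sketch below checkpoints a hypothetical `AgentSnapshot` to JSON and restores it; the class, its fields, and the `checkpoint` and `restore` helpers are assumptions for illustration, not CrewAI's own serialization format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AgentSnapshot:
    """The parts of an agent's state worth persisting (hypothetical)."""
    role: str
    step: int = 0
    memory: list = field(default_factory=list)


def checkpoint(snapshot):
    """Serialize a snapshot to a JSON string with a stable key order."""
    return json.dumps(asdict(snapshot), sort_keys=True)


def restore(serialized):
    """Rebuild a snapshot from a string produced by checkpoint()."""
    return AgentSnapshot(**json.loads(serialized))


before = AgentSnapshot(role="analyst", step=4, memory=["pricing data collected"])
saved = checkpoint(before)
after = restore(saved)
```

Sorting the keys makes two checkpoints of the same state byte-identical, which is convenient when versioning or diffing saved states.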

Frequently Asked Questions

Q1. How does CrewAI compare to LangChain or AutoGen?

LangChain focuses on LLM chaining and tool invocation. AutoGen introduced agent-to-agent conversations. CrewAI formalizes team-level orchestration, defining structure, roles, and inter-agent dependencies within a cohesive runtime.

Q2. Can CrewAI handle multimodal agents?

Yes. Each agent can attach a different model endpoint (text, vision, or audio), enabling cross-modal coordination in tasks like perception-driven planning or media generation.

Q3. Is CrewAI production-ready?

CrewAI is still evolving but supports stable Python APIs and integrations with OpenAI, Anthropic, and Gemini. Developers can deploy it with Docker or integrate it into existing LLMOps stacks.

The future of AI won’t be built by a single monolithic model. It will be coordinated by crews of intelligent agents working in sync, just like us.