From Science Fiction to Your Inbox: How AI Agents Are Quietly Running the World’s Biggest Companies

Forget chatbots: autonomous AI agents are already making million-dollar decisions, debugging code at 3 AM, and handling your customer complaints.

The Silent Revolution: Agents Among Us

While everyone debates whether AI will take our jobs, something more fascinating is happening: AI agents are already working alongside humans at scale, and the results are nothing like we expected.

From Silicon Valley startups to Fortune 500 enterprises, organizations are deploying autonomous agents that don’t just assist; they decide, execute, and learn. These aren’t theoretical experiments. They’re production systems handling millions of transactions, and their success stories (and failures) offer invaluable lessons for anyone building with AI.

What Makes an Agent “Wild”?

Before diving into deployments, let’s clarify what we mean. An AI agent isn’t just a chatbot or automation script. It’s a system that:

Perceives its environment through APIs, databases, or sensors

Makes autonomous decisions based on goals, not just rules

Takes actions that affect real business outcomes

Learns and adapts from feedback over time

When these agents escape the lab and enter production, they become “wild”: operating in messy, unpredictable real-world conditions.
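The four properties above can be read as a loop: perceive, decide, act, learn. The sketch below is a deliberately tiny illustration of that loop, not a production design. The `ThermostatAgent` class, its method names, and the halve-the-step learning rule are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Toy agent that keeps a room near a target temperature."""
    target: float
    step: float = 1.0  # how strongly the agent acts; adapted over time
    history: list = field(default_factory=list)

    def perceive(self, environment: dict) -> float:
        # In production this would be an API call, database query, or sensor read.
        return environment["temperature"]

    def decide(self, temperature: float) -> str:
        # Goal-based choice: move toward the target rather than follow a fixed script.
        if temperature < self.target - 0.5:
            return "heat"
        if temperature > self.target + 0.5:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        delta = {"heat": self.step, "cool": -self.step, "idle": 0.0}[action]
        environment["temperature"] += delta

    def learn(self, before: float, after: float) -> None:
        # Crude feedback rule: if the action overshot the target, act more gently next time.
        overshot = (before - self.target) * (after - self.target) < 0
        if overshot:
            self.step = max(0.25, self.step / 2)

    def run_once(self, environment: dict) -> str:
        before = self.perceive(environment)
        action = self.decide(before)
        self.act(action, environment)
        self.learn(before, self.perceive(environment))
        self.history.append(action)
        return action
```

Calling `run_once` repeatedly on a dictionary such as `{"temperature": 18.0}` moves the reading toward the target and then idles. A real agent would swap the hard-coded rules in `decide` for a model call, but the loop shape stays the same.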

Real Deployments That Changed the Game

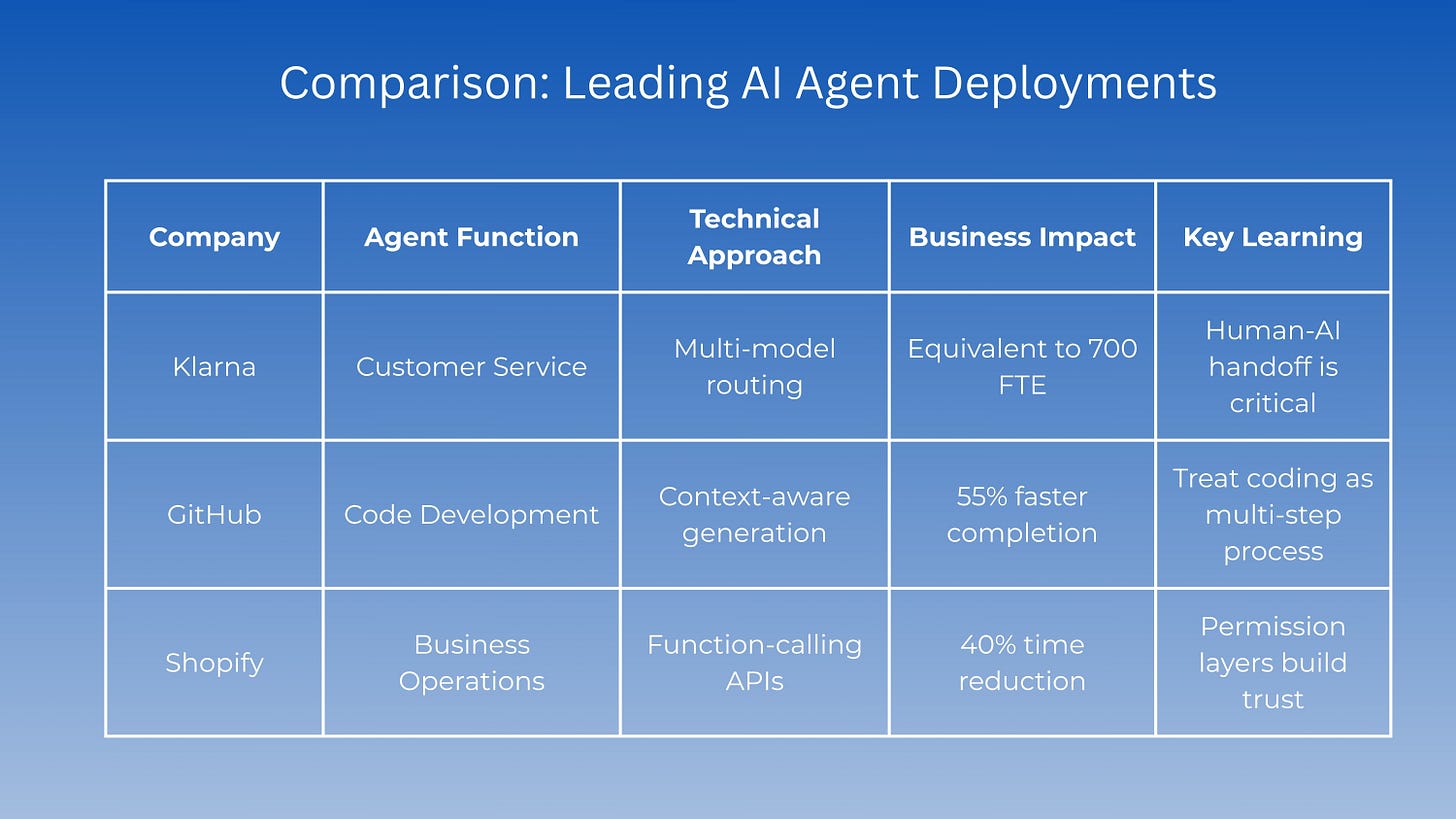

1. Klarna’s Customer Service Revolution

Swedish fintech giant Klarna deployed an AI agent that, by the company’s own account, now handles a volume of customer service inquiries equivalent to the work of 700 full-time employees. The technical breakthrough? A sophisticated routing system that knows when to escalate to humans, coupled with real-time learning from every interaction.

The non-technical lesson: Success wasn’t about replacing humans; it was about creating a seamless handoff. The agent handles routine queries instantly while humans tackle complex edge cases, and both sides learn from each other.

2. GitHub Copilot Workspace: Code That Writes Itself

Beyond autocomplete, GitHub’s deployment of agent-based development tools shows AI planning entire features, debugging across multiple files, and even reviewing its own code. The system maintains context across entire repositories.

The breakthrough: Treating code generation as a multi-step agentic process rather than single predictions. The agent proposes, revises, and validates, mimicking how senior developers actually work.
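To make the propose, revise, validate cycle concrete, here is a minimal sketch. It is not how GitHub implements anything; `propose_revise_validate`, `fake_propose`, and `run_tests` are hypothetical names, and the "model" is a stub that fixes its bug once it receives feedback.

```python
from typing import Callable, Optional


def propose_revise_validate(
    propose: Callable[[str, Optional[str]], str],
    validate: Callable[[str], Optional[str]],
    task: str,
    max_rounds: int = 3,
) -> Optional[str]:
    """Return the first candidate that passes validation, or None if none does."""
    feedback = None
    for _ in range(max_rounds):
        candidate = propose(task, feedback)  # a model call in a real system
        feedback = validate(candidate)       # e.g. run tests or a linter
        if feedback is None:                 # None means "no problems found"
            return candidate
    return None


# Stand-ins for the model and the test suite, for illustration only.
def fake_propose(task: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return "def add(a, b): return a - b"  # first draft contains a bug
    return "def add(a, b): return a + b"      # revised after feedback


def run_tests(source: str) -> Optional[str]:
    namespace: dict = {}
    exec(source, namespace)  # acceptable for a toy; sandbox real generated code
    return None if namespace["add"](2, 3) == 5 else "add(2, 3) should be 5"
```

With these stubs, `propose_revise_validate(fake_propose, run_tests, "write add")` rejects the first draft and returns the corrected one on the second round. The `max_rounds` cap matters in practice: without it, a model that never satisfies the validator would loop forever.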

3. Shopify’s Sidekick: Your AI Business Partner

Shopify’s agent doesn’t just answer questions; it takes actions. It can analyze sales trends, adjust inventory, create marketing campaigns, and optimize store layouts. The technical architecture uses function-calling to interact with dozens of Shopify APIs.

The critical insight: Permission layers matter. The agent can suggest anything but only executes low-risk actions autonomously. High-stakes decisions require human approval, creating a trust gradient.
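A trust gradient like this can be sketched as a small permission check that sits between the agent’s proposal and its execution. The action names and risk tiers below are invented for illustration and are not Shopify’s; a real catalog would come out of product and security review.

```python
from enum import Enum


class Risk(Enum):
    LOW = 1
    HIGH = 2


# Hypothetical action catalog, assumed for this example.
ACTION_RISK = {
    "draft_campaign": Risk.LOW,
    "generate_sales_report": Risk.LOW,
    "change_prices": Risk.HIGH,
    "issue_refund": Risk.HIGH,
}


def dispatch(action: str, approved_by_human: bool = False) -> str:
    """Decide whether an agent-proposed action runs now, waits, or is rejected."""
    risk = ACTION_RISK.get(action)
    if risk is None:
        return "rejected"          # unknown actions are never executed
    if risk is Risk.LOW or approved_by_human:
        return "executed"
    return "pending_approval"      # high-risk: queue for a human decision
```

The design choice worth noting is the default: anything not explicitly listed is rejected, so adding a new capability to the agent requires someone to classify its risk first.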

Technical Foundations: What’s Under the Hood

The Architecture Stack

Successful agent deployments typically combine:

Large Language Models (LLMs) for reasoning and natural language understanding

Function-calling frameworks to interact with external tools and APIs

Memory systems (vector databases, conversation history, long-term storage)

Orchestration layers (LangChain, AutoGPT, custom frameworks)

Safety guardrails (content filters, action validators, rollback mechanisms)
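As a rough illustration of how the function-calling and guardrail layers fit together, the sketch below registers tools by name and validates a model-produced call before running it. The `{"name": ..., "arguments": {...}}` payload shape and the `get_order_status` tool are assumptions for this example, not any specific vendor’s format.

```python
import json

TOOLS = {}


def tool(fn):
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_order_status(order_id: str) -> str:
    # Stand-in for a real API call.
    return f"Order {order_id} has shipped"


def execute_tool_call(raw: str) -> str:
    """Validate and run a model-produced call of the assumed JSON shape."""
    try:
        call = json.loads(raw)
        fn = TOOLS[call["name"]]
        return fn(**call["arguments"])
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        # Guardrail: malformed or unknown calls become an error message, not a crash.
        return f"tool_error: {type(exc).__name__}"
```

Returning the error as a string lets the orchestration layer feed it back to the model, which can then retry with a corrected call instead of halting the whole run.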

The Engineering Challenges

Real deployments revealed unexpected technical hurdles:

Reliability: LLMs are probabilistic. Production agents need error handling, retries, and graceful degradation when models hallucinate.

Latency: Multi-step agent reasoning can take seconds or minutes. Successful deployments either embrace async workflows or optimize with model distillation and caching.

Cost: Agent loops can rack up API costs quickly. Production systems implement budget limits, caching strategies, and hybrid approaches mixing small and large models.
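Two of these hurdles, reliability and cost, are often handled in the same wrapper around the model call. The following is one possible sketch, assuming a flat per-call cost; `Budget`, `call_with_retries`, and `make_flaky_model` are hypothetical names, and the default figures are placeholders rather than recommendations.

```python
import time


class BudgetExceeded(Exception):
    pass


class Budget:
    """Tracks spend for one agent run and refuses calls past the limit."""

    def __init__(self, limit_usd: float):
        self.limit_usd = limit_usd
        self.spent_usd = 0.0

    def charge(self, cost_usd: float) -> None:
        if self.spent_usd + cost_usd > self.limit_usd:
            raise BudgetExceeded(f"limit of ${self.limit_usd:.2f} reached")
        self.spent_usd += cost_usd


def call_with_retries(call_model, prompt, budget, cost_usd=0.01,
                      retries=3, base_delay=0.5, fallback="escalate_to_human"):
    """Try the model a few times; degrade to a safe fallback if it keeps failing."""
    for attempt in range(retries):
        try:
            budget.charge(cost_usd)  # every attempt costs money, even failed ones
            return call_model(prompt)
        except BudgetExceeded:
            break                    # out of budget: stop immediately, do not retry
        except Exception:
            if attempt < retries - 1:
                time.sleep(base_delay * 2 ** attempt)  # exponential backoff
    return fallback


def make_flaky_model(failures: int):
    """Test double: fails `failures` times, then answers."""
    state = {"left": failures}

    def model(prompt):
        if state["left"] > 0:
            state["left"] -= 1
            raise TimeoutError("model timed out")
        return f"answer to: {prompt}"

    return model
```

For example, `call_with_retries(make_flaky_model(2), "hi", Budget(1.0), base_delay=0)` succeeds on the third attempt and charges the budget three times. The fallback value is where graceful degradation happens: the caller gets a safe default instead of an exception.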

Non-Technical Lessons From the Trenches

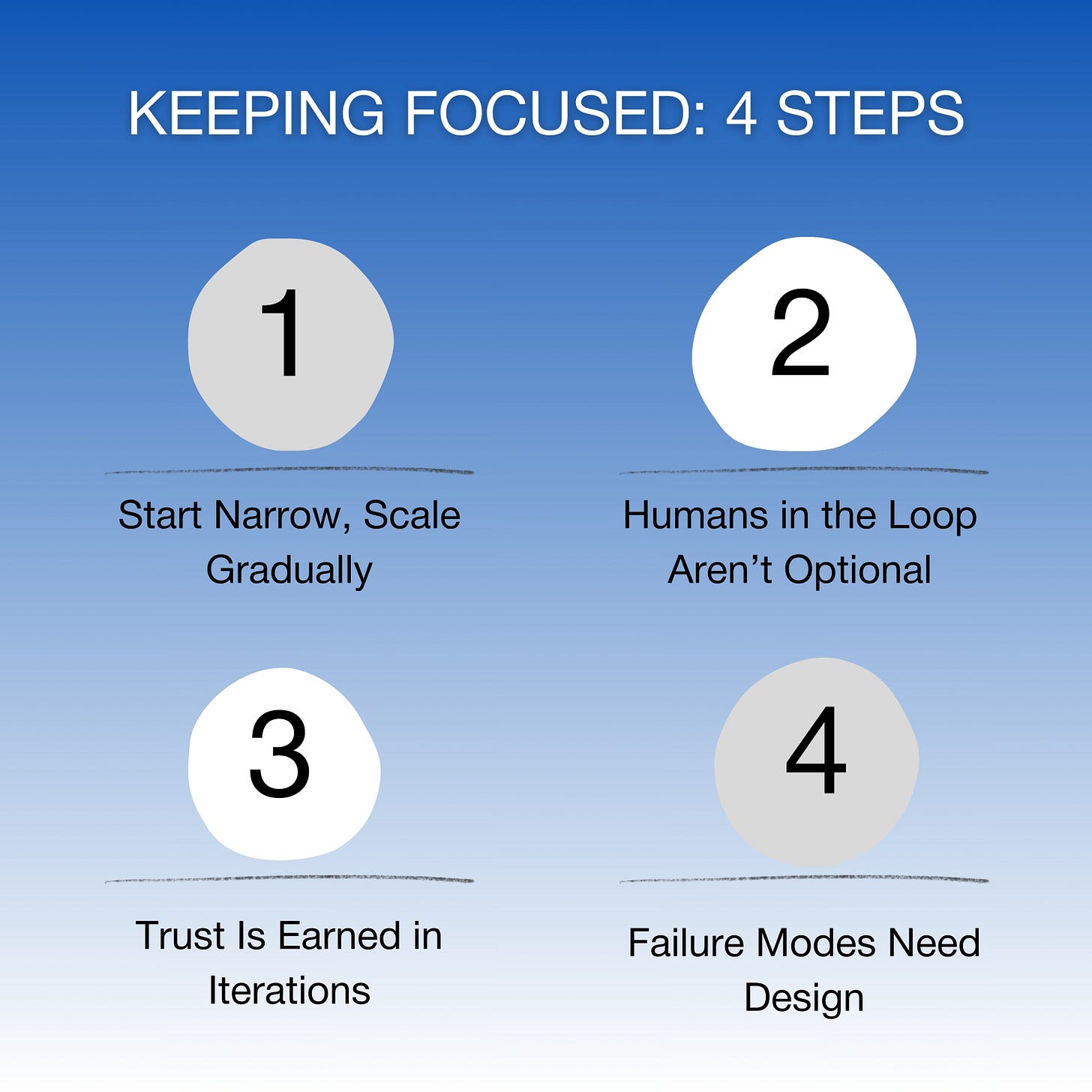

1. Start Narrow, Scale Gradually

Every successful deployment started with a tightly scoped use case. Intercom’s customer support agent began handling only password resets before expanding to billing questions, then account issues. Breadth came after proving depth.

2. Humans in the Loop Aren’t Optional

The highest-performing systems maintain human oversight, but smartly. Instead of reviewing every action, they use confidence scoring to flag uncertain decisions. An agent might handle 95% of cases autonomously but route 5% to humans for review.
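Confidence-based routing of this kind can be sketched in a few lines. The example assumes the agent emits a calibrated confidence score between 0 and 1, which is a strong assumption in practice; the `Decision` type, the function names, and the 0.8 threshold are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    answer: str
    confidence: float  # assumed to be a calibrated score in [0, 1]


def route(decision: Decision, threshold: float = 0.8) -> str:
    """Send confident decisions straight through; queue the rest for a person."""
    return "auto" if decision.confidence >= threshold else "human_review"


def autonomy_rate(decisions, threshold: float = 0.8) -> float:
    """Fraction of decisions handled without a human."""
    if not decisions:
        return 0.0
    auto = sum(route(d, threshold) == "auto" for d in decisions)
    return auto / len(decisions)
```

Tracking `autonomy_rate` over time shows how a threshold change shifts work between the agent and the review queue, which is how a split like the 95/5 one described above would be tuned rather than guessed.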

3. Trust Is Earned in Iterations

Organizations that succeeded rolled out agents gradually: first to internal teams, then to power users, and finally to everyone. Each phase built confidence and revealed edge cases that pure testing missed.

4. Failure Modes Need Design

When agents fail, they should fail gracefully. The best deployments built explicit fallback paths: escalation to humans, rollback mechanisms, and clear communication about limitations.

The Emerging Patterns of Success

Across dozens of production deployments, clear patterns emerge:

Multi-agent systems outperform single agents: Rather than one superintelligent agent, successful teams deploy specialized agents (researcher, writer, validator) that collaborate.

Domain-specific fine-tuning matters: Generic LLMs work for prototypes, but production systems benefit from fine-tuning on company-specific data and workflows.

Observability is critical: You can’t improve what you can’t measure. Successful deployments instrument everything: decision paths, latency, user satisfaction, and override rates.
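As a small illustration of the observability point, the sketch below records each agent step with its latency and whether a human later overrode it. `AgentTrace` and its methods are hypothetical, and the events live in memory here; a real system would ship them to a metrics or tracing backend.

```python
import time
from dataclasses import dataclass, field


@dataclass
class AgentTrace:
    """In-memory log of agent steps, for illustration only."""
    events: list = field(default_factory=list)

    def record(self, step: str, latency_s: float, overridden: bool = False) -> None:
        self.events.append(
            {"step": step, "latency_s": latency_s, "overridden": overridden}
        )

    def timed(self, step: str, fn, *args):
        """Run fn(*args) and record how long the step took."""
        start = time.perf_counter()
        result = fn(*args)
        self.record(step, time.perf_counter() - start)
        return result

    def override_rate(self) -> float:
        """Share of recorded steps that a human later overrode."""
        if not self.events:
            return 0.0
        return sum(e["overridden"] for e in self.events) / len(self.events)

    def decision_path(self) -> list:
        """Ordered list of step names, useful for replaying a run."""
        return [e["step"] for e in self.events]
```

A rising override rate is often the earliest signal that an agent has drifted out of its depth, which is why it belongs next to latency on the same dashboard.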

What’s Next: The Wild Frontier

The next wave of agent deployments is pushing boundaries:

Autonomous code review agents at major tech companies

Medical diagnosis assistants working alongside doctors

Financial analysis agents managing investment portfolios

Scientific research agents formulating and testing hypotheses

These aren’t future predictions; they’re happening now, in production, affecting real outcomes.

FAQs

Q: How do I know if my use case is ready for agents?

A: Look for tasks with clear success criteria, available APIs or data sources, and tolerance for 90-95% accuracy. If your process can handle occasional errors gracefully, you’re a good candidate.

Q: What’s the biggest difference between building a chatbot and an agent?

A: Chatbots respond; agents act. Agents need function-calling capabilities, error handling, and often multi-step reasoning. They’re architecturally more complex, but they can carry a task through to completion instead of only describing how to do it.

Q: How much does it cost to deploy an AI agent in production?

A: Costs vary wildly, from hundreds per month for simple agents to tens of thousands for high-volume, complex systems. The biggest cost is usually LLM API calls, followed by infrastructure and human oversight.

Q: Should I build custom or use existing frameworks?

A: Start with frameworks (LangChain, LlamaIndex, AutoGPT) to prove concepts quickly. As you scale, you’ll likely need custom components for your specific reliability, latency, or cost requirements.

Q: What’s the biggest risk in deploying agents?

A: Over-automation. The temptation is to let agents do everything, but the best deployments maintain human judgment for high-stakes decisions while automating the routine work that bogs down teams.

Ready to Deploy Your First Agent?

The era of AI agents isn’t coming; it’s here. Companies that learn from these early deployments will build the competitive advantages of the next decade.

Don’t wait for perfect conditions. Start with a small, well-defined problem. Build your agent. Deploy it to a limited audience. Learn from real usage. Iterate ruthlessly.

The agents are already in the wild. The question isn’t whether to deploy; it’s whether you’ll learn from those who went first.

The future of work is not human vs. AI; it’s humans and agents, working together in ways we’re only beginning to understand. Join the pioneers.